i-TREASURES USE CASE 4: CONTEMPORARY MUSIC COMPOSITION

The case study on contemporary music composition aims to develop a novel intangible musical instrument. This digital musical instrument will be a novel multimodal human-machine interface for music composition, in which natural gestures performed in a real-world environment are mapped to music/voice segments, taking into account the emotional state of the performer. Thus, ‘everyday gestures’ performed in space or on a surface, together with the emotional feedback of the performer, will continuously control the synthesis of musical entities.
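A minimal Python sketch of what such a continuous gesture-and-emotion mapping could look like. Everything in it is an assumption for illustration: the `GestureFrame` and `EmotionState` types, the gesture features normalized to [0, 1], the valence/arousal emotion model and the particular synthesis parameters are hypothetical and do not describe the actual i-Treasures design.

```python
from dataclasses import dataclass


@dataclass
class GestureFrame:
    """One frame of hypothetical, pre-normalized gesture features."""
    x: float      # horizontal hand position in [0, 1]
    y: float      # vertical hand position in [0, 1]
    speed: float  # hand speed in [0, 1]


@dataclass
class EmotionState:
    """Hypothetical emotion estimate on a valence/arousal plane."""
    valence: float  # -1 (negative) .. +1 (positive)
    arousal: float  # -1 (calm) .. +1 (excited)


def clamp(value: float, lo: float, hi: float) -> float:
    """Restrict value to the closed interval [lo, hi]."""
    return max(lo, min(hi, value))


def map_to_synthesis(g: GestureFrame, e: EmotionState) -> dict:
    """Map one gesture frame plus the performer's emotional state to synthesis parameters."""
    arousal = clamp(e.arousal, -1.0, 1.0)
    # Vertical position selects a pitch over two octaves starting at MIDI note 48 (C3).
    midi_pitch = 48 + round(clamp(g.y, 0.0, 1.0) * 24)
    # Gesture speed drives loudness; arousal scales it by up to +/-20 %.
    amplitude = clamp(g.speed * (1.0 + 0.2 * arousal), 0.0, 1.0)
    # Horizontal position becomes a stereo pan in [-1, 1].
    pan = clamp(g.x, 0.0, 1.0) * 2.0 - 1.0
    # The sign of valence chooses a major or minor scale colouring.
    mode = "major" if e.valence >= 0.0 else "minor"
    # Arousal sets note density between 1 and 8 notes per second.
    density = 1.0 + (arousal + 1.0) / 2.0 * 7.0
    return {"midi_pitch": midi_pitch, "amplitude": amplitude,
            "pan": pan, "mode": mode, "density": density}


# Example: a mid-height, moderately fast gesture by an excited, mildly positive performer.
print(map_to_synthesis(GestureFrame(x=0.5, y=0.5, speed=0.5),
                       EmotionState(valence=0.3, arousal=1.0)))
```

Because each call handles a single frame, the function would be invoked at the sensor frame rate so that gesture and emotion steer the synthesis continuously rather than triggering fixed, pre-composed events.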

This intangible musical instrument will be addressed not only to experienced performers, musicians, researchers or composers, but also to users without any specific musical knowledge. In this way, the heritage of the classical composers can be made available to everyone, and it can be better preserved and renewed through natural bodily and emotional interaction.

The performing arts combine communicational aspects (expressions, emotions, etc.) with control aspects (triggering actions, controlling continuous parameters). The performer is both a trigger and a transmitter, connecting perception, gesture and knowledge.

Decades ago, the electronic synthesizer was a revolutionary concept: a new musical instrument capable of producing sounds by generating electrical signals of different frequencies, controlled by pianistic gestures performed on a keyboard. Today, music production still depends on musical instruments based on intermediate and obtrusive mechanisms (piano keyboard, violin bow, etc.). Many years of study are required to reach a good level in (a) controlling these mechanisms and (b) reading and comprehending predefined musical scores. This long learning process creates a gap between non-musicians and music. Hence there is a growing need for a novel intangible musical instrument in which natural gestures (effective, accompanying and figurative) performed in a real-world environment, together with emotions and other stochastic parameters, control non-sequential music.

UMS 'n JIP's COMMITMENTS

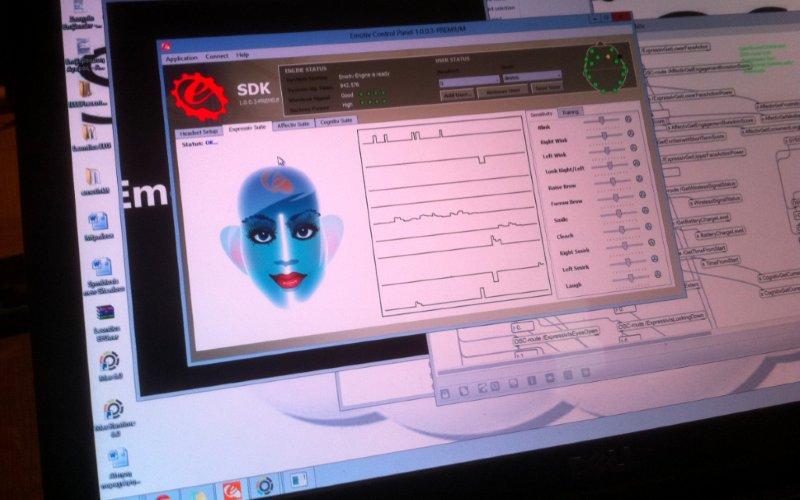

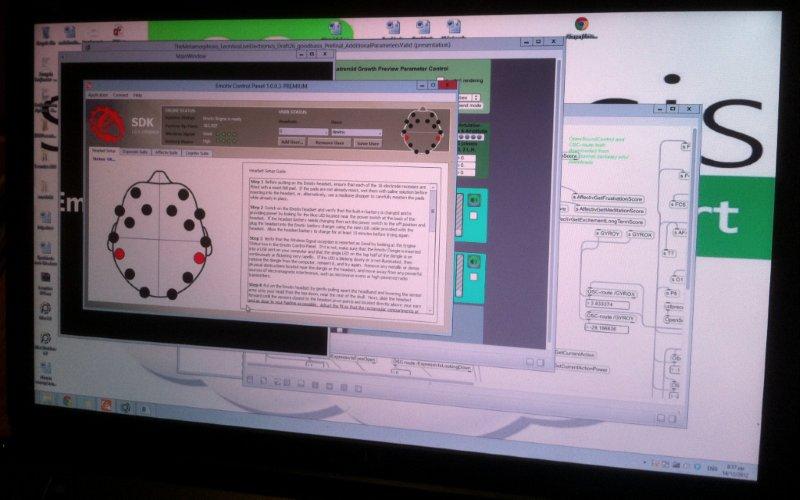

UMS 'n JIP participates actively in the EU project i-Treasures, inspired by the 2003 UNESCO Convention for the Safeguarding of the Intangible Cultural Heritage (ICH). Among other things, this project focuses on capturing and analyzing ICH using facial expression analysis, body and gesture recognition, vocal tract modeling, sound processing and electroencephalography in the domains of singing, performance and music composition. In this context, UMS 'n JIP will work on an 'Emotional Brain Mapping', whose aim is the emotional control of musical processes through real-time EEG brainwave analysis. The research results of the 'Emotional Brain Mapping' can potentially be applied as follows:


1. Research & medical applications

1.a Integrating the results into learning programs for behavioural pathologies, to alleviate deficits of emotional expressivity (e.g. autism)

1.b Using them to possibly reactivate/use basic/archaic emotional pathways in coma patients (awakening)

1.c Enabling emotional communication with locked-in patients or similar

2. Music

2.a Supporting the development of an instrument that enables both focused awareness and learning of emotional expression, and its application in the composition and performance of both traditional and contemporary music

2.b Applying scientific findings on non-verbal communication to the conscious use of music as a medium of intercultural communication

3. Communication

Acquiring scientific knowledge about non-verbal communication in different cultural areas and using it to develop both new methods of communication training and interfaces for intercultural communication


UMS 'n JIP is actively participating in the EU project i-Treasures, which arose from the 2003 UNESCO Convention for the Safeguarding of the Intangible Cultural Heritage (ICH). Among other things, this project focuses on capturing and analyzing ICH by examining facial expressions, body expression and gesture, vocal use and modeling, sound processing and electroencephalography (EEG) in the fields of singing, performance and composition.


Within this framework, UMS 'n JIP is working on an 'Emotional Brain Mapping' in its research project 'i-Treasures Research'. Its goal is to steer musical processes deliberately through emotions by means of real-time brainwave analysis. The potential applications of the research results of this 'Emotional Brain Mapping' fall into three groups:

1. Research & medical applications

1.a Integration into learning programs for behavioural pathologies, to alleviate deficits in emotional expressivity (e.g. autism)

1.b Use for the possible reactivation/use of primitive/archaic emotional pathways in coma patients (awakening)

1.c Enabling emotional communication with locked-in patients and the like

2. Music

2.a Support in developing an instrument for consciously becoming aware of and learning emotional expression, and for its use in the composition and interpretation of traditional and contemporary music

2.b Conscious use of music as a medium of intercultural communication, drawing on scientific findings on non-verbal communication

3. Communication technology

Gathering scientific findings on non-verbal communication in different cultural areas and using them to develop new methods of communication training and to design intercultural interfaces in communication

i-TREASURES USE CASE 4: CONTEMPORARY MUSIC COMPOSITION - EXAMPLE 1 (COMMON BRAIN)

Leontios Hadjileontiadis / Common Brain, 2013 (op. 86)

for male voice, sopranino and subbass recorders in F, spring drum, two Emotiv EPOC EEG headsets and live electronics

commissioned by UMS 'n JIP

live video/visuals by Jose Javier Navarro Lucas

world premiere: Basel (Switzerland), Gare du Nord, 14/MAR/2013

Common Brain. The work is based on the construction of a "common brain" in a twofold sense: one that is shared, and one that does not deviate from the norm. Starting from the point of existence (through the understanding of breathing), the work develops the multi-layered proliferation of neurons, followed by network activation, text training, reaction to external stimulation and, finally, emotional responsiveness. The performers take part in forming the soundscape through the real-time acquisition of their electroencephalograms and their transformation into sound material, creating an experiential perception of the "common brain" in many different aspects of its development and formation. This experiential stochasticity brings "sound liquidness" and continuous de/construction to the foreground, making them basic structural ingredients of a dynamically produced composition seen from an alternative perspective: that of so-called Biomusic.
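One common way to turn a live EEG stream into a sound-control signal is to measure power in classical frequency bands over short windows. The Python sketch below illustrates that general idea; it is not the method used in Common Brain. The 128 Hz sampling rate, the one-second window, the beta/alpha power ratio used as an "arousal" proxy and its mapping onto a low-pass filter cutoff are all assumptions chosen for illustration, and `band_power`, `arousal_index` and `to_cutoff` are hypothetical names.

```python
import numpy as np

FS = 128  # assumed EEG sampling rate in Hz


def band_power(window: np.ndarray, fs: float, lo: float, hi: float) -> float:
    """Mean power of `window` between lo and hi Hz from a Hann-windowed periodogram."""
    x = window - np.mean(window)                 # remove the DC offset
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x)))) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)  # frequency of each FFT bin
    mask = (freqs >= lo) & (freqs <= hi)
    return float(np.mean(spectrum[mask]))


def arousal_index(window: np.ndarray, fs: float = FS) -> float:
    """Hypothetical arousal proxy: beta (13-30 Hz) power divided by alpha (8-12 Hz) power."""
    alpha = band_power(window, fs, 8.0, 12.0)
    beta = band_power(window, fs, 13.0, 30.0)
    return beta / (alpha + 1e-12)  # small epsilon avoids division by zero


def to_cutoff(index: float, lo_hz: float = 200.0, hi_hz: float = 8000.0) -> float:
    """Map a non-negative index to a low-pass cutoff in Hz on a logarithmic scale."""
    t = index / (1.0 + index)  # squash [0, inf) into [0, 1)
    return lo_hz * (hi_hz / lo_hz) ** t


# One second of synthetic "EEG": an alpha-dominated and a beta-dominated signal.
time_s = np.arange(FS) / FS
relaxed = np.sin(2 * np.pi * 10 * time_s) + 0.1 * np.sin(2 * np.pi * 20 * time_s)
alert = 0.1 * np.sin(2 * np.pi * 10 * time_s) + np.sin(2 * np.pi * 20 * time_s)

for name, w in (("relaxed", relaxed), ("alert", alert)):
    print(name, round(to_cutoff(arousal_index(w))), "Hz")
```

The squashing step keeps the cutoff bounded however large the ratio becomes; in a live setting the same functions would be applied to a sliding window on each electrode, so the sound follows the performer's brain activity continuously.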

text: Fernando Pessoa ("Crónica da vida que passa…", 5 de Abril 1915, I.)


ARISTOTLE UNIVERSITY OF THESSALONIKI (AUTH)

In this research project, AUTH is represented by the Signal Processing and Biomedical Technology Unit (SPBTU) of the Telecommunications Laboratory of the Department of Electrical and Computer Engineering (E&CE). The SPBTU serves the educational and research needs of the E&CE Department; its main scientific interests include the acquisition, processing and pattern analysis of biomedical data. Over the years, the unit has conducted and published extensive research on advanced signal and image processing techniques with applications in several fields of biomedical engineering, such as electromyogram (EMG)/electroencephalogram (EEG) processing, respiratory/cardiac sound processing, endoscopic/magnetic resonance image analysis, brain-computer interfaces, music perception, biomusic and affective computing.

The SPBTU has also attracted funding from state and private bodies for several research projects aimed mainly at assisting people with disabilities, e.g. SmartEyes (a mobile navigation system for the blind) and Sign2Talk (a mobile system for converting sign language to speech, and vice versa, for the deaf). The three faculty members and the three current doctoral students of the unit have a strong background in experiment design, biomedical data recording, signal and image processing algorithms, and machine learning.

LEONTIOS HADJILEONTIADIS / composer

Leontios J. Hadjileontiadis was born in Kastoria, Greece, in 1966. He received the Diploma degree in Electrical Engineering in 1989 and the Ph.D. degree in Electrical and Computer Engineering in 1997, both from the Aristotle University of Thessaloniki, Thessaloniki, Greece. In December 1999 he joined the Department of Electrical and Computer Engineering, Aristotle University of Thessaloniki, as a faculty member; he is currently an Associate Professor there, working on lung sounds, heart sounds, bowel sounds, ECG data compression, seismic data analysis and crack detection in the Signal Processing and Biomedical Technology Unit of the Telecommunications Laboratory. His research interests are in higher-order statistics, alpha-stable distributions, higher-order zero crossings, wavelets, polyspectra, fractals, and neuro-fuzzy modeling for medical, mobile and digital signal processing applications. Dr. Hadjileontiadis is a member of the Technical Chamber of Greece, the IEEE, the Higher-Order Statistics Society, the International Lung Sounds Association and the American College of Chest Physicians. He received the second award at the Best Paper Competition of the ninth Panhellenic Medical Conference on Thorax Diseases '97, Thessaloniki. He was also an open finalist at the Student Paper Competition (Whitaker Foundation) of IEEE EMBS '97, Chicago, IL, a finalist at the Student Paper Competition (in memory of Dick Poortvliet) of MEDICON '98, Lemesos, Cyprus, and the recipient of the Young Scientist Award of the twenty-fourth International Lung Sounds Conference '99, Marburg, Germany. In 2004, 2005 and 2007 he organized and mentored three five-student teams that ranked third, second and seventh worldwide, respectively, at the Imagine Cup Competition (Microsoft) in São Paulo, Brazil (2004), Yokohama, Japan (2005) and Seoul, Korea (2007), with projects involving technology-based solutions for people with disabilities. Dr. Hadjileontiadis also holds a Ph.D. degree in music composition (University of York, UK, 2004) and is currently a Professor of composition at the State Conservatory of Thessaloniki, Greece.

UMS 'n JIP / performers, audio programming

_UMS 'n JIP

_Swiss Contemporary Music Duo

_voice, recorder & electronics

_Ulrike Mayer-Spohn

_Javier Hagen

commissioning, composing and performing contemporary music

scientific, technical and artistic research

With more than 600 concerts all over the world, over 150 commissioned works, 6 chamber operas and 11 international awards since its foundation in 2007, as well as guest lectures and masterclasses at the universities of Shanghai, Hong Kong, Adelaide, Basel, Riga, Moscow, Istanbul and many more, UMS 'n JIP is one of the most active and experienced contemporary music ensembles today. Owing to their collaboration with the Greek composer and neuroscientist Leontios Hadjileontiadis during the Greece Project 2012, they have been invited to participate in i-Treasures as experts in composing, performing and programming contemporary music, as well as in scientific research on EEG brain maps.
